Better Code, Better Science

Introducing a new open-source book project on scientific coding using AI tools

This post kicks off what I expect to be a long series of posts based on content from an open-source textbook that I am currently developing, tentatively titled “Better Code, Better Science”. The goal of the book is to help scientists learn to write code that is cleaner, more robust, and more reproducible. To keep things digestible, I’ll post a couple of sections at a time.

The entire book can be accessed here and the GitHub repository is here. This material is released under CC-BY-NC.

Introduction

There was a time when becoming a scientist meant developing and honing a set of very specific laboratory skills: a cell biologist would learn to culture cells and perform assays, a materials scientist would learn to use high-powered microscopes, and a sociologist would learn to develop surveys. Each might also perform data analysis during the course of their research, but in most cases this was done using software packages that allowed them to enter their data and specify their analyses using a graphical user interface. While many researchers knew how to program a computer, and for some fields it was necessary (e.g. to control recording instruments or run computer simulations), it was relatively uncommon for most scientists to spend a significant proportion of their day writing code.

How times have changed! In nearly every part of science today, working at the highest level requires the ability to write code. While the preferred languages vary across fields, it is rare for a student to make it through graduate school today without spending some time writing code. Whereas statistics classes before 2000 almost invariably taught the topic using statistical software packages whose names are quickly being forgotten (SPSS, JMP), most graduate-level statistics classes are now taught using programming languages such as R, Python, or Stata. The ubiquity of code has been accelerated even further by the increasing prevalence of machine learning techniques in science. These techniques, which bring unprecedented analytic power to scientists, can only be tapped effectively by researchers with substantial coding skills.

The increasing prevalence of coding in scientific practice contrasts starkly with the lack of training that most researchers receive in software engineering. By "software engineering" I don't mean introductory classes in how to code in a particular language. Rather, I am referring to the set of practices developed within computer science and engineering that aim to improve the quality and efficiency of the software development process and of the resulting software products. A glimpse of this field can be had by examining the Software Engineering Body of Knowledge (SWEBOK), first published in 2004 and most recently updated in 2024. While much of SWEBOK focuses on topics that are primarily relevant to large commercial software projects, it also includes numerous sections that are relevant to anyone writing code that needs to function correctly, such as how to test code for validity and how to maintain software once it has been developed.

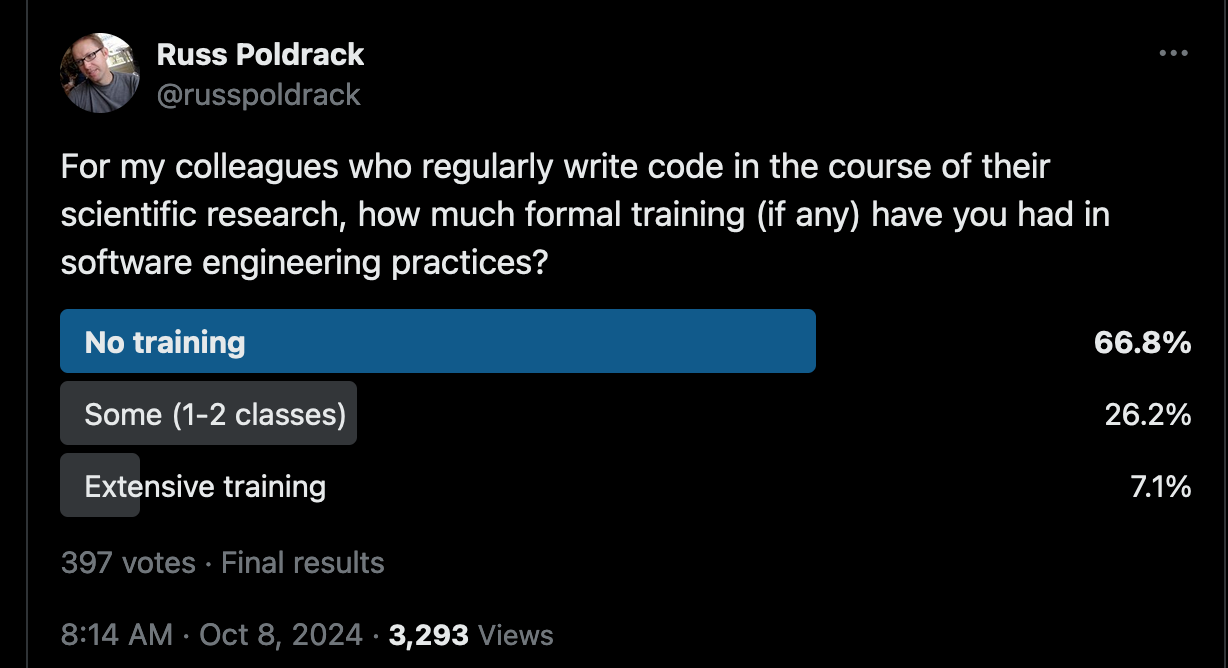

I’ve spent the last decade regularly giving talks on scientific coding practices, and I often start by asking how many researchers in the audience have received software engineering training. In most audiences well under a quarter of the people raise their hands; this is true both for the neuroscientists and psychologists I usually speak to and for the researchers from other fields I occasionally address. This impression is consistent with the results of a poll that I conducted on the social media platform X, in which the majority of scientists responded that they had received no training in software engineering.

Thus, a large number of scientists today are operating as amateur software engineers.

Why poor software engineering is a threat to science

There is no such thing as bug-free code, even in domains where it really matters. In 1999 a NASA space probe called the *Mars Climate Orbiter* was lost when measurements sent in the English unit of pound-force seconds were mistakenly interpreted as being in the metric unit of newton-seconds; as a result, the spacecraft veered too close to the Martian atmosphere and was destroyed. The total amount lost on the project was over $300 million. Another NASA disaster occurred just a few months later, when the *Mars Polar Lander* lost communication during its landing procedure on Mars. This loss is now thought to be due to a software design error rather than to a bug per se:

The cause of the communication loss is not known. However, the Failure Review Board concluded that the most likely cause of the mishap was a software error that incorrectly identified vibrations, caused by the deployment of the stowed legs, as surface touchdown. The resulting action by the spacecraft was the shutdown of the descent engines, while still likely 40 meters above the surface. Although it was known that leg deployment could create the false indication, the software's design instructions did not account for that eventuality. [Wikipedia].
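Returning to the *Mars Climate Orbiter*: its failure is a reminder that a bare number carries no record of its units. One common defensive habit is to make the unit explicit wherever a value crosses a boundary between programs. The sketch below illustrates that habit under simple assumptions; it is not a reconstruction of NASA's software, the function name `to_newton_seconds` and the unit strings are hypothetical, and the conversion factor is the standard definition of the pound-force (1 lbf = 4.4482216152605 N).

```python
# Standard conversion: 1 pound-force = 4.4482216152605 newtons,
# so 1 lbf*s = 4.4482216152605 N*s.
LBF_S_TO_N_S = 4.4482216152605


def to_newton_seconds(value, unit):
    """Convert an impulse to newton-seconds, refusing any unit it does not know.

    `unit` is a hypothetical label that travels with the value, so the
    receiving code never has to guess which unit the sender used.
    """
    if unit == "N*s":
        return value
    if unit == "lbf*s":
        return value * LBF_S_TO_N_S
    raise ValueError(f"unknown impulse unit: {unit!r}")


reported = 10.0  # hypothetical impulse produced by a program working in lbf*s

# The silent failure: the number is used as if it were already in N*s.
assumed_n_s = reported

# The explicit version: the unit is stated and converted deliberately.
actual_n_s = to_newton_seconds(reported, "lbf*s")

print(f"underestimated by a factor of {actual_n_s / assumed_n_s:.2f}")
```

Raising an error for an unrecognized unit is a deliberate choice: a crash during testing is far cheaper than a silently wrong number. Dedicated unit-handling libraries exist for Python (Pint, for example), but even this small amount of explicitness forces a mismatch to be noticed.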

Studies of error rates in computer programs have consistently shown that code written by professional programmers contains 1-2 errors per 100 lines of code (Symons & Horner, 2019). Interestingly, the most common type of error in the analyses reported by Symons and Horner was "misunderstanding the specification": the problem was correctly described, but the programmer misinterpreted the description. Other common types of errors included numerical errors, logical errors, and memory management errors.
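To make these rates concrete, the expected number of errors scales linearly with the size of a program. If we additionally assume, purely for illustration, that errors occur independently so that their count follows a Poisson distribution, then the probability that a program contains no errors at all is e^(-λ), where λ is the expected count. This independence assumption is a simplification introduced here, not a result from Symons and Horner, and the helper names below are hypothetical.

```python
import math


def expected_errors(lines_of_code, errors_per_100_lines):
    """Expected error count for a program of the given length."""
    return lines_of_code * errors_per_100_lines / 100


def prob_error_free(lines_of_code, errors_per_100_lines):
    """Probability of zero errors, assuming a Poisson-distributed error count."""
    return math.exp(-expected_errors(lines_of_code, errors_per_100_lines))


for loc in (100, 500, 2000):
    low = expected_errors(loc, 1.0)
    high = expected_errors(loc, 2.0)
    p = prob_error_free(loc, 1.0)
    print(f"{loc:>5} lines: {low:.0f}-{high:.0f} expected errors, "
          f"P(no errors at 1 per 100 lines) = {p:.2g}")
```

Even at the optimistic rate, a 2,000-line analysis pipeline would be expected to contain about 20 errors, and under the Poisson assumption the probability that a mere 100 lines are error-free is only about 37%.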

If professional coders make errors at a rate of 1-2 errors per hundred lines, it seems very likely that the error rates of amateur coders writing software for their research would be substantially higher. While not all coding errors will make a difference in the final calculation, it's likely that many will (Soergel, 2015). We have in fact experienced this in our own work (as described in this blog post). In 2020 we posted a preprint that criticized the design of a particular aspect of a large NIH-funded study, the Adolescent Brain Cognitive Development (ABCD) study. The ABCD dataset is shared with researchers, and we also made our code openly available via GitHub. The ABCD team eagerly reviewed our code and discovered an error: an overly complex indexing scheme involving double negatives had led to incorrect indexing of a data frame, which changed the results. Fortunately this happened while the paper was under review, so we were able to notify the journal and modify the paper to reflect the bug fix; had we not made the code publicly available, the error would either never have been caught or would have been caught only after publication, requiring a published correction.
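The actual indexing code from that project is not reproduced here, but the general hazard is easy to illustrate. In the hypothetical sketch below, an exclusion flag with a negative name (`not_usable`) must be negated again to select the rows to keep, and a single misplaced `not` would silently select exactly the wrong subjects. Converting the flag once into a positively named mask, and checking the selection with assertions, makes the intent explicit. The subject IDs and flag values are invented for illustration.

```python
subjects = ["s01", "s02", "s03", "s04"]

# Hypothetical flag with a negative name: True means the subject is NOT usable.
not_usable = [False, True, False, True]

# Double negative: "keep the subjects that are not not-usable".
# One dropped 'not' here would silently keep exactly the wrong subjects.
kept_confusing = [s for s, flag in zip(subjects, not_usable) if not flag]

# Clearer: negate once, give the result a positive name, then select with it.
usable = [not flag for flag in not_usable]
kept = [s for s, ok in zip(subjects, usable) if ok]

# Cheap sanity checks that would catch an accidentally flipped mask.
assert kept == kept_confusing
assert len(kept) == sum(usable)
assert not any(flag for s, flag in zip(subjects, not_usable) if s in kept)

print(kept)
```

With a pandas data frame the same idea applies to boolean masks negated with `~`: compute a positively named mask once and reuse it, rather than stacking negations inside the indexing expression.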

This was a minor error, but there are also prominent cases in which software errors had catastrophic consequences for scientific claims. The best known is that of Geoffrey Chang, a structural biologist who published several papers in the early 2000s examining the structure of ABC transporter proteins. Chang's group had to retract five papers, including three published in the prestigious journal *Science*, after learning that their custom analysis code had mistakenly flipped two columns of data, which led to an erroneous estimate of the protein structure (Miller, 2006). This is an example of how a very simple coding mistake can have major scientific consequences.
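Why would flipping two columns be so damaging, yet so hard to notice? One geometric consequence is easy to demonstrate: swapping two coordinate columns of a 3D structure reflects it into its mirror image. All distances are preserved, so the result still looks plausible, but its handedness is reversed. The sketch below shows this using the scalar triple product, whose sign encodes handedness. It illustrates the general hazard only; it is not a reconstruction of the Chang group's software or data, and the points are arbitrary.

```python
import math


def cross(b, c):
    """Cross product of two 3D vectors."""
    return (b[1] * c[2] - b[2] * c[1],
            b[2] * c[0] - b[0] * c[2],
            b[0] * c[1] - b[1] * c[0])


def signed_volume(a, b, c):
    """Scalar triple product a . (b x c); its sign encodes handedness."""
    bc = cross(b, c)
    return a[0] * bc[0] + a[1] * bc[1] + a[2] * bc[2]


def swap_xy(point):
    """The mistake: exchange the first two coordinate columns."""
    return (point[1], point[0], point[2])


# Three arbitrary points, e.g. atom positions relative to a fourth atom at the origin.
points = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
swapped = [swap_xy(p) for p in points]

# Pairwise distances are unchanged, so nothing looks obviously wrong...
for i in range(3):
    for j in range(i + 1, 3):
        assert math.isclose(math.dist(points[i], points[j]),
                            math.dist(swapped[i], swapped[j]))

# ...but the handedness flips: same magnitude, opposite sign.
print(signed_volume(*points), signed_volume(*swapped))
```

This prints `1.0 -1.0`: the swapped structure is a perfect mirror image, which is precisely the kind of error that internal consistency checks on distances alone cannot detect.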

Is software development still important in the age of AI?

It would be an understatement to say that coding has undergone a revolution since the introduction of coding assistance tools based on artificial intelligence (AI) systems. While some degree of assistance has long been available (such as smart autocompletion in code editors), the introduction of Copilot by GitHub in 2021 and its subsequent incorporation into a number of integrated development environments (IDEs) brought a new level of automation to the coding process. There are likely very few coders today who have not used these tools or at least tried them out. These tools have prompted some breathless hand-wringing about whether AI will eliminate the need for programmers, but nearly all voices on this topic agree that while programming will change drastically with AI assistance, the ability to program will remain a foundational skill for years to come.

In early 2023 the frenzy about AI reached a boiling point with the introduction of the GPT-4 language model by OpenAI. Analyses of early versions of this model (Bubeck et al., 2023) showed that its ability to solve computer programming problems was on par with that of human coders, and much better than that of the previous GPT-3 model. Later that year GPT-4 became available as part of the GitHub Copilot AI assistant, and those of us using Copilot saw some quite astonishing improvements in performance compared with the GPT-3 version. I had been developing a workshop on software engineering practices for scientists, and the advent of this new tool led to some deep soul-searching about whether such training would even be necessary given the power of AI coding assistants. My colleagues and I (Poldrack et al., 2023) subsequently performed a set of analyses that asked three questions about the ability of GPT-4 to perform scientific coding. I will describe these experiments in more detail in a later post, but in short, they showed that while GPT-4 can solve many coding problems quite effectively, it was far from able to solve common coding problems completely on its own. The situation has changed to some degree in the two years since, with the advent of better language models and agentic coding tools such as Claude Code, but even with these tools one still needs to regularly debug problems and check that the code is doing what it is meant to do.
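A small example of the kind of checking this requires: suppose an assistant is asked for a function that returns the sample standard deviation. A plausible-looking answer might divide by n rather than n - 1, which yields the population standard deviation instead. Both functions below are hypothetical and are not the output of any particular model; the point is that comparing generated code against a trusted reference (here Python's `statistics.stdev`, which computes the sample standard deviation) on a small worked example catches the discrepancy immediately.

```python
import math
import statistics


def sd_divide_by_n(values):
    """Hypothetical generated code: divides by n, i.e. the population SD."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


def sd_divide_by_n_minus_1(values):
    """Corrected version: divides by n - 1, i.e. the sample SD."""
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / (len(values) - 1))


def matches_reference(sd_func, data):
    """Check a candidate implementation against the standard library."""
    return math.isclose(sd_func(data), statistics.stdev(data))


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
print(matches_reference(sd_divide_by_n, data))
print(matches_reference(sd_divide_by_n_minus_1, data))
```

For this data set the mean is 5 and the squared deviations sum to 32, so dividing by n gives exactly 2.0, while the correct sample value is the square root of 32/7, about 2.14. The first check therefore fails and the second passes.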

I believe that AI coding assistants have the potential to greatly improve the experience of coding and to help new coders learn how to code effectively. However, my experiences with AI-assisted coding have also led me to the conclusion that software engineering skills will remain *at least* as important in the future as they are now. First, and most importantly, the hardest problem in programming is not the generation of code; rather, it is the decomposition of the problem into a set of steps that can be used to generate code. It is no accident that the section of SWEBOK on "Computing Foundations" starts with the following:

1. Problem Solving Techniques

1.1. Definition of Problem Solving

1.2. Formulating the Real Problem

1.3. Analyze the Problem

1.4. Design a Solution Search Strategy

1.5. Problem Solving Using Programs

The reason programming will remain an essential skill even if code is no longer written by humans was expressed by Robert Martin in 2009, well before the current AI tools were even imaginable:

...some have suggested that we are close to the end of code. That soon all code will be generated instead of written. That programmers simply won’t be needed because business people will generate programs from specifications. Nonsense! We will never be rid of code, because code represents the details of the requirements. At some level those details cannot be ignored or abstracted; they have to be specified. And specifying requirements in such detail that a machine can execute them is programming. Such a specification is code. (Martin, 2009)

For simple, common problems, AI tools may be able to generate a complete and accurate solution, but for the more complex problems that most scientists face, a combination of coding skills and domain knowledge will remain essential for figuring out how to decompose a problem and express it in a form that a computer can solve. Even if that involves writing prompts for a generative AI model rather than writing code de novo, a deep understanding of the generated code will be essential to ensuring that it accurately solves the problem at hand.
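As a small illustration of what decomposition means in practice, consider a hypothetical request: "compute each participant's median reaction time, excluding trials faster than 200 ms." Even this one-sentence specification hides decisions, such as whether a trial of exactly 200 ms is kept. Breaking the task into small named steps forces each decision to be written down, and each step can be checked on its own. The data, threshold, and function names are all hypothetical.

```python
from statistics import median

# Hypothetical trial records: (participant_id, reaction_time_ms)
trials = [("p1", 350.0), ("p1", 150.0), ("p1", 420.0),
          ("p2", 510.0), ("p2", 480.0)]

MIN_RT_MS = 200.0  # assumed exclusion threshold; a real study would justify it


def exclude_fast_trials(trials, min_rt=MIN_RT_MS):
    """Step 1: drop trials faster than the threshold.

    Using >= means a trial of exactly min_rt is KEPT; the one-sentence
    request never said so, which is why the decision is recorded here.
    """
    return [(pid, rt) for pid, rt in trials if rt >= min_rt]


def group_by_participant(trials):
    """Step 2: collect the remaining reaction times for each participant."""
    groups = {}
    for pid, rt in trials:
        groups.setdefault(pid, []).append(rt)
    return groups


def summarize(groups):
    """Step 3: reduce each participant's reaction times to a median."""
    return {pid: median(rts) for pid, rts in groups.items()}


result = summarize(group_by_participant(exclude_fast_trials(trials)))
print(result)
```

Whether a pipeline like this is written by hand or generated from a prompt, the decisions embedded in each step (here, the `>=` comparison) are exactly the "details of the requirements" that Martin argues cannot be abstracted away.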

Second, scientific coding requires an extra level of accuracy. Scientific research forms the basis for many important decisions in our society, from which medicines to prescribe to how effective a particular method for energy generation will be. The technical report for GPT-4 makes it clear that we should think twice about an unquestioning reliance upon AI models in these kinds of situations:

Care should be taken when using the outputs of GPT-4, particularly in contexts where reliability is important. (OpenAI, 2024)

The goal of this book is to show you how to write code that reliably solves scientific problems while taking advantage of the benefits of AI coding assistants.

Next post: Why better code can mean better science