Essential tools for writing better code: Large language models

Better Code, Better Science: Chapter 2, Part 2

This is a section from the open-source living textbook Better Code, Better Science, which is being released in sections on Substack. The entire book can be accessed here and the GitHub repository is here. This material is released under CC-BY-NC.

Large language models

Large language models (LLMs) power tools like ChatGPT, enabling them to generate human-like text responses. Understanding how these models function can help users make better use of AI coding assistants. This section provides a brief introduction to LLMs, focusing on their structure, capabilities, and limitations.

A brief history of artificial neural networks

LLMs are a type of *artificial neural network*, a computer model composed of a huge number of computing units that are loosely patterned after neurons in the brain. Artificial neural networks were first developed in the 1940s, only to be abandoned by AI researchers after the early versions were shown to have major limitations in the kinds of problems they could solve. There was a renaissance of neural network research in the 1980s and 1990s arising primarily from researchers in cognitive science, but interest again waned as the field of machine learning developed tools that were able to solve many of the same problems in a computationally simpler manner. It was not until the late 2000s that the latest wave of interest in neural networks came about, building on the development of methods that allowed researchers to effectively train neural networks with many layers, known as deep neural networks (hence the common term deep learning). These methods, together with the realization that graphics processing units (GPUs) could be used to train these models much more quickly and the development of large datasets for model training, led to early advances in the mid-2010s, particularly in the domain of image recognition.

How LLMs are built and trained

The LLMs that are most prominent today, such as GPT-4, Claude, and Google Gemini, are based on a specific neural network architecture known as a transformer, which was first introduced by Vaswani and colleagues in 2017. Transformers were developed to learn how to map one sequence to another sequence, as in translating a sentence in one language to a sentence in another. However, large transformers trained on massive datasets have since shown themselves to be able to adapt to many different types of problems that they were never explicitly trained to perform. For this reason, these models (along with other kinds of large pre-trained models) have been referred to as foundation models (Bommasani et al., 2022).

One important feature of foundation models is that they are generally trained in a "self-supervised" way, which means that we don't explicitly have to tell the model how it should respond for any particular input. This enables training on very large datasets, since the data don't need to be explicitly labeled. In the case of GPT-4 and other similar models, they are simply given large sequences of text and trained to accurately predict the next *token* (which could refer to words, parts of words, or other elements). Note that the models themselves don't actually see text; instead, the text is tokenized to convert it into numbers before it is input to the model, and the output of the model is then converted from numerical tokens back to language. LLMs like GPT-4 are trained on massive amounts of text, numbering in the trillions of tokens. The model is trained to become increasingly better at predicting the next token by changing the *weights* (the strength of connections between the different units) in the model in a way that tries to incrementally correct its errors, which after many hours of training across many GPUs results in a model that can perform the prediction task well.
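As a rough illustration of the two ideas above (text becoming numbers, and next-token prediction as the training task), here is a toy sketch. It is not how any real LLM tokenizes text: splitting on whitespace is an assumption made for simplicity, whereas real tokenizers use learned subword vocabularies, and the function names are hypothetical.

```python
# Toy illustration only: real LLM tokenizers use learned subword
# vocabularies, not whitespace splitting.

def build_vocab(text):
    """Map each distinct whitespace-separated word to an integer id."""
    vocab = {}
    for word in text.split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Convert text into a list of integer token ids."""
    return [vocab[word] for word in text.split()]

def decode(ids, vocab):
    """Convert integer token ids back into text."""
    id_to_word = {i: w for w, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)

def next_token_pairs(ids):
    """Each training example pairs the tokens so far with the next token."""
    return [(ids[:i], ids[i]) for i in range(1, len(ids))]

text = "the cat sat on the mat"
vocab = build_vocab(text)
ids = encode(text, vocab)
print(ids)  # [0, 1, 2, 3, 0, 4]

# No human labeling is needed: the "label" is simply the next token.
for context, target in next_token_pairs(ids):
    print(context, "->", target)
```

Because the targets come directly from the text itself, any large body of text can serve as training data, which is what makes the self-supervised approach scale.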

After this training, the model has a lot of knowledge but is often not very well behaved, so model-builders generally do some form of fine-tuning after initial training of the model, primarily to train it to perform in a way that is more amenable to being a helpful chatbot.

"Open Source" language models

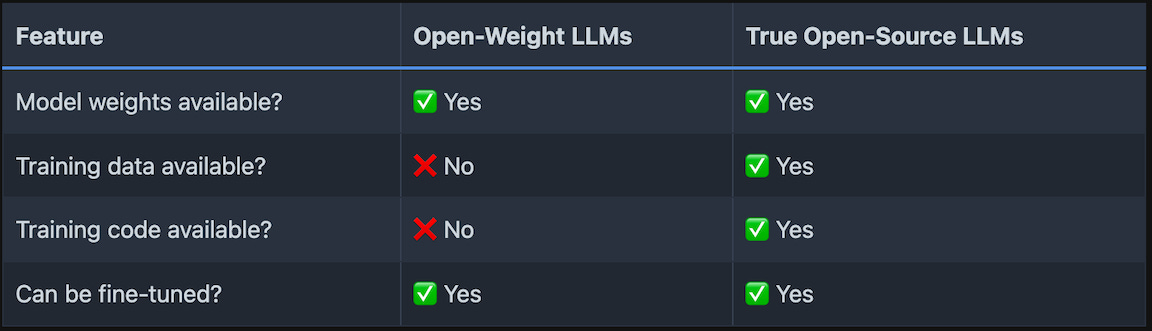

The large commercial models such as GPT-4, Claude, and Gemini are truly "black boxes" in the sense that we are not provided with detailed information about how they work internally or how they were trained; those details are secrets closely guarded by the companies that develop the models. However, in the last few years an ecosystem of "open source" language models has burgeoned, allowing researchers to run powerful pre-trained models on their own computing hardware and providing some degree of insight into the function of the model. It's important to point out that the label "open source" is in many cases a misnomer, since it's relatively rare for the authors of these models to give full access to all of the code and data that went into training the model. Many of them are better described as "open weight" models (a term we will use throughout this book), since the model weights and code necessary to perform inference are made openly available even if the training code and data are not. Despite these limitations, the availability of these open-ish models has given researchers the opportunity to work more closely with these models and see into their inner workings. They also allow users to perform further fine-tuning to augment model performance in particular domains.

A table describing the difference between open-weight and open-source LLMs.

Running large open weight models with tens of billions of parameters (such as the well-known Llama models released by Meta) can require significant GPU resources, but many of these models are also released in smaller versions that can be run with reasonable speed on a laptop with a GPU; all of the examples in this book are run on a MacBook Pro M3 Max laptop. Users wishing to run the larger open weight models can access them via service providers who offer API access to these models for a fee. We will discuss working with LLM APIs in a later chapter.

As an example of what we can do with open weight language models, suppose that we want to compare the output of models before and after instruction tuning. While we can't access versions of commercial models such as GPT-4 prior to instruction tuning, there are a large number of open weight models for which both versions are available. We will show results from the Qwen1.5-0.5B model, which is very small and thus runs relatively quickly even on a laptop. There are numerous tools available to run these models on a local computer; here I am using the LM Studio package.

To see the difference that this fine-tuning makes, we can give the same prompt to a model with and without instruction tuning: "What is the best way to relax after a long day of Python coding?"

Here is the result from the model without instruction tuning:

"Going for a walk or having a drink with friends is a great way to relax after a long day of Python coding.

system

You are a helpful assistant.

user

ow can I generate an XML representation of a list of employees with their corresponding departments and salaries using C# code? And once the XML is generated, how can I parse and print the data to a new file? Can you provide a example of the XML data that I can modify in the code to generate the XML representation?

Here's an example of the XML data that I can modify to generate the XML representation: ... " [continues with XML output]

It's not a very helpful response, and it's also followed by a bunch of content that is irrelevant to the prompt. By comparison, the instruction-tuned version of the same model gives a much better response:

"Relaxing after a long day of Python coding can be a great way to recharge and refocus. Here are some tips that might help you relax:

1. Exercise: Engage in physical activity such as yoga, stretching, or running to get your blood flowing and reduce stress levels.

2. Listen to music: Listening to calming music can help reduce anxiety and promote relaxation.

3. Take a break: Taking a short break from work or programming can help you recharge and refocus.

4. Practice mindfulness: Mindfulness meditation can help you focus on the present moment and reduce stress levels.

5. Eat healthy: Eating a balanced diet rich in fruits, vegetables, whole grains, and lean protein can help you feel more energized and less stressed.

6. Connect with others: Spending time with friends or family can help reduce feelings of loneliness and increase feelings of support.

7. Write down your thoughts: Writing down your thoughts and emotions can help you process them and relax.

Remember, it's important to take care of yourself both physically and mentally before returning to work. By taking these steps, you can help ensure that you can return to work feeling refreshed and ready to tackle any challenges that come your way."

In-context learning and LLM prompting

Once the training of the model is complete, its weights are fixed and the model itself does not change unless additional training or fine-tuning is applied. Given this, how is it that these models can exhibit such a wide range of behaviors, and how can they adapt to the details of a particular chat session? It turns out that the model is "learning" from all of the interactions that occur within a specific chat session, but this learning does not occur through modifications of the model's weights. Instead, it occurs as the model builds up a store of information within its context window, which you can think of as the model's "working memory", similar to the way that we can hold a phone number in our mind but lose it as soon as we stop rehearsing it. The model's response at any point in time is conditioned upon all of the content that has occurred up to that point in the chat session, up to the limit of its context window (which is now large enough for most models to hold a substantial amount of text). In this way, the model can "learn" without requiring any lasting changes in its structure.
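The following minimal sketch illustrates the idea that the "memory" of a chat lives in the context rather than in the weights. Everything here is an assumption for illustration: `count_tokens` is a crude word count standing in for a real tokenizer, and dropping the oldest messages first is just one possible way of staying within a context limit, not necessarily what any particular system does.

```python
def count_tokens(text):
    # Crude stand-in: real systems count tokens with the model's tokenizer.
    return len(text.split())

def build_context(history, max_tokens):
    """Keep the most recent messages that fit within the token budget."""
    kept = []
    used = 0
    for message in reversed(history):
        n = count_tokens(message)
        if used + n > max_tokens:
            break
        kept.append(message)
        used += n
    return list(reversed(kept))

history = [
    "user: my variable is named n_subjects",
    "assistant: noted",
    "user: write a loop over it",
]

# With a generous budget, the whole session conditions the next response.
print(build_context(history, max_tokens=100))

# With a tight budget, the oldest message falls out of "working memory",
# so the model would no longer see the variable name.
print(build_context(history, max_tokens=8))
```

The model itself is unchanged between the two calls; only the text it is conditioned on differs.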

Understanding in-context learning is important for LLM power-users because it relates directly to the effectiveness of different prompting strategies. In particular, it suggests that an effective prompt should help build up enough relevant information in the context window that other relevant information is likely to be retrieved. We will return later in the book to the utility of prompting strategies.

Randomness in LLMs

When an LLM generates output, the model doesn't uniquely specify a particular token to be output next. Instead, it outputs a set of values that are transformed into probabilities over tokens, and the next token is then chosen based on those probabilities. As an example, we can run the same prompt five times ('Output a random vegetable.') on a small LLM (Qwen-2.5-0.5B-Chat), removing any extraneous text generated by the model:

Sure, here's a random vegetable: Green beans

Sure, here's a random vegetable: Tomato

Sure, here's a random vegetable: broccoli!

Sure, here's a random vegetable: Spinach.

Sure, here's a random vegetable: Veggie: Broccoli

In this case we ran each prompt with a different random seed, which is a value that allows one to reproducibly generate a set of random numbers. Open source models, as well as some commercial models, allow specifying the random seed when submitting a prompt, usually requiring use of the API.
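To make the role of the random seed concrete, here is a small sketch that samples a "next token" from a fixed set of probabilities using Python's standard library. The token list and the probabilities are made up for illustration and do not come from any model; `sample_token` is a hypothetical helper.

```python
import random

# Made-up tokens and probabilities, for illustration only.
tokens = ["Green beans", "Tomato", "Broccoli", "Spinach"]
probs = [0.4, 0.3, 0.2, 0.1]

def sample_token(seed):
    """Sample one token; the seed fully determines the random draw."""
    rng = random.Random(seed)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Different seeds can give different outputs for the same "prompt".
for seed in range(5):
    print(seed, sample_token(seed))

# The same seed always reproduces the same output.
print(sample_token(42) == sample_token(42))  # True
```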

When choosing amongst the possible responses based on their probabilities, one could simply always output the token with the highest probability, which is known as "greedy" selection. However, LLMs often introduce some variability in output by sampling from the probabilities produced by a softmax operator. This procedure has a parameter known as temperature that allows one to scale the degree of randomness, from deterministic (greedy) responding when temperature = 0 to greater randomness as temperature increases. These systems often default to a moderate degree of randomness (with temperature around 0.7) to allow some exploration without enabling surprising or bizarre responses.
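The effect of temperature can be seen in a short sketch of a temperature-scaled softmax. The raw model outputs (`logits`) below are made-up numbers, and temperature = 0 is handled as a separate greedy case because dividing by zero is undefined; actual inference libraries may implement these details differently.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("use greedy() for temperature = 0")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(logits):
    """Temperature = 0 limit: always pick the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])

logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens

for t in (0.1, 0.7, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

print("greedy choice:", greedy(logits))
```

At low temperature nearly all of the probability sits on the top-scoring token, so sampling behaves almost like greedy selection; at higher temperature the probabilities flatten and lower-ranked tokens are chosen more often.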

Reproducibility challenges of LLMs

The randomness of large language models is particularly important for researchers using them in contexts where reproducibility is important. Research performed using commercial/closed large language models should be considered irreproducible in principle; even if one records the system fingerprint (uniquely specifying which model was run) and sets the random seed, there is no guarantee that these models will remain available. As an example, OpenAI has deprecated a number of models, including the widely used `text-davinci-002` model, meaning that they are no longer available to users. Research performed on open weight models is in theory reproducible if the random seed is specified along with the specific model version used. However, in many cases it is also important to ensure that any results obtained using LLMs are robust to the specific random seed chosen. One should *never* cherry-pick scientific results by trying different random seeds and only reporting the results that are most consistent with the hypothesis being tested; we refer to this as seed-hacking, by analogy to the commonly used term p-hacking.
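One way to guard against seed-hacking is to fix the list of seeds in advance and report the full distribution of results across them. The sketch below assumes a hypothetical `run_experiment` function; here it is a random stand-in that returns a noisy score, whereas in practice it would run the actual LLM-based analysis with the given seed.

```python
import random
import statistics

def run_experiment(seed):
    """Hypothetical stand-in for an LLM-based analysis returning one score."""
    rng = random.Random(seed)
    return 0.75 + rng.gauss(0, 0.05)

# Choose the seeds before looking at any results, and report all of them.
seeds = list(range(20))
scores = [run_experiment(s) for s in seeds]

summary = {
    "n_seeds": len(seeds),
    "mean": statistics.mean(scores),
    "sd": statistics.stdev(scores),
    "min": min(scores),
    "max": max(scores),
}
print(summary)
```

Reporting the spread across seeds, along with the seeds themselves and the model version, lets readers judge whether a result depends on one lucky draw.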